Get up and running fast with Terraform & Google Kubernetes Engine

Get up and running fast with infrastructure-as-code on Google Kubernetes Engine with Terraform. Use this template to get started and build out your application!

Kubernetes is a popular container orchestration engine, but it’s hard, especially for newcomers. If you’re used to running a bunch of virtual machines, connecting them together to get your app running, and then worrying about keeping those machines patched and maintained and the development environments consistent, then you’re going to have a bad time. Containers have changed a great deal about how software is developed, tested and deployed, and have reduced much of the cognitive burden and the knowledge that is often locked away in the minds of staff who just “know how to run” the organisation’s applications on a server “somewhere”. This post aims to remove much of that burden and move the locked-away knowledge into documentation by introducing concepts such as infrastructure-as-code and automation.

Resources

Terraform is a tool by HashiCorp which helps to manage cloud infrastructure using a declarative configuration language. Terraform translates those declarations into API calls through plugins for the various providers. For Kubernetes, I have created a base starting point which can be used and expanded on, and which I wanted to share. Let’s get stuck into it.

The repo is available here and has a number of configuration files of which some will need to be customised for the deployment. Go clone the repo and then we can get started.

Getting set up

The first step is to sign into your Google Cloud account and, in the IAM section, create a service account with just enough permissions to deploy the infrastructure. Following the principle of least privilege reduces the risk of too much power over your GCP resources (and by extension, your own resources, aka your cash!) being misused or stolen if the credentials got into the wrong hands. Plus it will make any security and finance people in your organisation happy.

Once you’ve created the service account and downloaded the JSON file which contains the authentication information, it’s time to update the terraform.tfvars file at the root of the repo. Configure the two lines with your GCP project and the path to the JSON file you’ve just downloaded.
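As a rough guide, the finished terraform.tfvars might look like the sketch below. The variable names project and credentials_file match what the provider block in main.tf reads; the values are placeholders, and the file in the repo may order or comment things differently.

```hcl
# terraform.tfvars (sketch; both values are placeholders, not real identifiers)

# The ID of the GCP project the cluster will be created in.
project = "my-gcp-project-id"

# Path to the service account key downloaded from the IAM console.
# Keep this file out of version control.
credentials_file = "./service-account-key.json"
```

Terraform loads a file named terraform.tfvars automatically, so no extra flags are needed when running commands from the root of the repo.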

Deploy the cluster

Next, make sure Terraform is installed, then run terraform init to initialise Terraform in the repository if you haven’t already. Once that’s done, you’ll be able to run terraform plan. This prints all the changes Terraform will make to your cloud infrastructure. It’s worth reviewing the output so you’re fully aware of what will be done for you. When you’re ready, run terraform apply, which will create all of the resources outlined in the plan. Let’s do that now. The process will take around 8 minutes to create the cluster, network and machines before we can start issuing commands to the cluster.

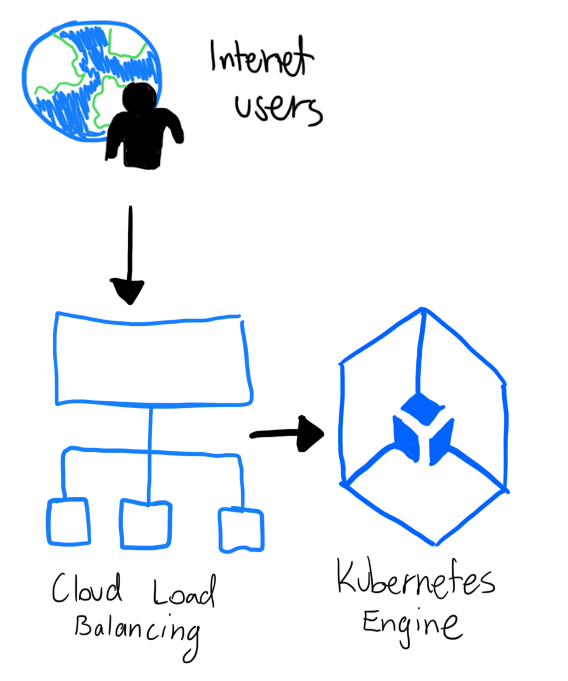

Here’s a basic architecture diagram of what we will end up with once everything is deployed and kubernetes is configured.

Understanding the terraform files

Whilst the resources are being provisioned, we can take a look at each of the files in the repository to see how it all fits together. Let’s start with the variables.tf file.

You’ll see things like:

    variable "initial_node_count" {
      default = 1
    }

    variable "region" {
      default = "australia-southeast1"
    }

    variable "machine_type" {
      default = "n1-standard-1"
    }

In short, this variable file allows us to declare variables that we can use in our infrastructure, should we want to use them in multiple places.

Many configurations in GCP require a region, for example. Since we’re providing a default here, our resources will go in the australia-southeast1 region unless we say otherwise. We could just as easily set the default to us-central1, or whatever we wanted.

You don’t have to provide these defaults, but it’s a good idea to have a standard. You can then override it where you need to, or leave it alone if you want to use the same value everywhere in your app.
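To make that concrete, here is a small sketch of the two styles side by side. The region variable and its default come from the repo; the type and description arguments, the assumption that project is declared without a default, and the -var override shown in the comments are additions for illustration.

```hcl
# A variable with a default: used whenever nothing overrides it.
variable "region" {
  type        = string
  description = "GCP region to deploy into"
  default     = "australia-southeast1"
}

# A variable without a default (assumed here for illustration): Terraform
# prompts for a value unless one is supplied, for example in terraform.tfvars.
variable "project" {
  type        = string
  description = "ID of the GCP project to deploy into"
}

# Overriding a default for a single run, without editing any files:
#   terraform plan -var="region=us-central1"
```

Leaving the default off is a reasonable choice for values like the project ID, where there is no sensible standard to fall back on.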

Next up we have the main.tf file which is the entry point for all the infrastructure declarations. In this case, we’re just using one file since our infrastructure is relatively straightforward and doesn’t use too many resources. Normally it makes sense to configure things like VMs, networking, IAM, and so on in separate resource files, so it’s clear and logical to understand.

In the provider block, we’re declaring that google is the cloud provider for our infrastructure. Terraform can also be used with other clouds, so if you wanted to build out templates for them, you’d change the value here.

    provider "google" {
      credentials = file(var.credentials_file)
      project     = var.project
      region      = var.region
      zone        = var.zone
    }

This block is pretty self-explanatory, so I won’t go into too much detail here.

The other two blocks declare the infrastructure we need to run our app. Take a look at the resources here. Let’s start with the node pool. The second label in a resource block’s declaration, default in this case, is a name we give the resource so that we can refer to it in other places. One such reference is to the container cluster, on line 32. There we refer to the cluster block using the syntax resource_type.name, which in our case is google_container_cluster.default. We assign whatever the cluster’s name ends up being to the node pool resource we’re declaring here. This also tells Terraform that the cluster must exist before the node pool can be created, since the node pool uses one of its values.

You may recognise some of the other configuration options if you’re familiar with GCP. For example, we’re making use of preemptible VMs and giving the node pool some OAuth scopes, such as monitoring and the ability to write logs to Stackdriver.

The only other thing I’d like to point out is that in the google_container_cluster resource we remove the default node pool that is automatically created along with the cluster. We want to use our own node pool of preemptible VMs, so we remove the default nodes once the cluster has been created to avoid wasting resources and to stick with the configuration we want.
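Put together, the two resources described above might look roughly like the sketch below. This is an approximation built from the description, not a copy of the repo’s main.tf: the cluster name is taken from the gcloud command used later in this post, the node pool name is hypothetical, and the use of var.zone for location is an assumption.

```hcl
# Sketch only: the repo's main.tf is the source of truth.

resource "google_container_cluster" "default" {
  name     = "sample-cluster" # matches the name passed to gcloud later on
  location = var.zone         # assumption: a zonal cluster

  # A cluster has to be created with at least one node, so we ask for the
  # smallest possible default pool and have it removed straight away.
  remove_default_node_pool = true
  initial_node_count       = 1
}

resource "google_container_node_pool" "default" {
  name     = "sample-node-pool" # hypothetical name
  location = var.zone

  # Referencing the cluster here creates an implicit dependency, so Terraform
  # creates the cluster before it creates the node pool.
  cluster    = google_container_cluster.default.name
  node_count = var.initial_node_count

  node_config {
    preemptible  = true
    machine_type = var.machine_type

    oauth_scopes = [
      "https://www.googleapis.com/auth/logging.write",
      "https://www.googleapis.com/auth/monitoring",
    ]
  }
}
```

Both resources can share the name default because resource names only need to be unique within a resource type.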

Kubectl do the thing

If you’re new to Kubernetes, then kubectl won’t be a tool you’ve had the joy of using yet, but you will very soon. It lets you apply declarative YAML files that describe how to run workloads inside Kubernetes, as well as how to configure networking, secrets, load balancing and traffic splitting, amongst many other things. In our example we’ll focus on creating a service, a deployment and an ingress.

Let’s take a look at the service file.

    apiVersion: v1
    kind: Service
    metadata:
      name: example
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: example
      type: NodePort

This specification creates a new Service object named “example” in the default namespace, which targets TCP port 80 on any Pod labelled run: example.

This is a good segue into the deployment.yml file. From the kubernetes docs:

A Deployment provides declarative updates for Pods and ReplicaSets. You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate. You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: example
      namespace: default
    spec:
      selector:
        matchLabels:
          run: example
      template:
        metadata:
          labels:
            run: example
        spec:
          containers:
          - image: nginx:latest
            imagePullPolicy: IfNotPresent
            name: example
            ports:
            - containerPort: 80
              protocol: TCP

This file describes what we want to run behind the service we just created. The Deployment is named example, as indicated by the metadata.name field. The spec.selector field defines how the Deployment finds which Pods to manage. In this case, it simply selects a label that is defined in the Pod template (run: example).

The spec.template.spec.containers field describes the container you want to run and its configuration. In this case, we’re just pulling in the latest nginx image for our demo to prove the concept out.

Side note: if you wanted more than one copy of your container serving traffic in your cluster, you could add the replicas key at the top level of the spec, with a value of your choosing. For example, if you wanted 5 copies of your app running, you could set replicas: 5 like so:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: example
      namespace: default
    spec:
      replicas: 5
      ...

Finally we’ve got our ingress.yml file, which tells Kubernetes how we can reach our application from the outside world. This will also conveniently create a load balancer for us on Google Cloud, which will give us a public IP address so public internet traffic can start to reach our app!

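    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: example-ingress
    spec:
      backend:
        serviceName: example
        servicePort: 80

Here you can see that we’ve specified a default backend which sends incoming traffic to port 80 of the service we talked about first. It’s extremely straightforward. Note that the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22, so on a newer cluster this file needs updating to networking.k8s.io/v1, where the backend is written under spec.defaultBackend.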

Configuring our cluster

By now your cluster may have finished deploying. If not, you may be a speedy reader and it’s not quite done yet! Once Terraform reports that your cluster is ready, we need to configure it.

The tool I referred to earlier, kubectl, is about to get some use. If you haven’t already, go and install it, and whilst you’re at it, install the gcloud command-line tools too. Once they’re installed, you’ll need to authenticate to the cluster. To do that, run the following command:

    gcloud container clusters get-credentials sample-cluster

You’ll get a confirmation message, then you should be able to connect with kubectl. To verify it works, run:

    kubectl cluster-info

If that works, then you have command-line access to the cluster and should be able to issue commands. To get our app running we need to apply the three configurations we discussed in the last section.

    kubectl apply -f app/service.yml
    kubectl apply -f app/deployment.yml
    kubectl apply -f app/ingress.yml

You’ll get confirmation messages that they’ve been applied. Now the Kubernetes control plane is going about applying those desired configurations and creating the load balancing for you.

If you pop into the console and click through to Kubernetes Engine, you’ll see the running cluster bringing up the pod(s) and creating the service and load balancer. The load balancer section in the console will show you a public IP address. Once the service is deployed, you’ll be able to visit that address and see your app running!

All done!

Cleaning up

If you were running through the above to get your hands dirty but don’t need the infrastructure we just created, then you can destroy everything quite easily! Simply run terraform destroy in your terminal, and Terraform will ask you to confirm that you want to delete the resources you’ve declared. Confirming this will tear everything down.